Error vs. Deception: Unpacking the 'Why' of Retractions (part 1/2)

A description, an example, and a freely available tool for classifying retractions.

Retractions are a vital part of the self-correcting nature of science, but they often raise more questions than they answer. What went wrong? Was it an honest mistake or something more troubling? And how should we interpret a researcher’s trustworthiness after a paper is retracted?

Knowing that a paper was retracted is not enough; understanding why is essential. Version 2.0 of our taxonomy of retraction reasons builds on our earlier framework and is informed by community feedback and new developments in the Retraction Watch taxonomy.

One Database, Many Reasons

It’s important to acknowledge that Retraction Watch is the only global database that consistently tags retraction notices with reasons. But even this curated effort reveals the challenge: many retractions occur without sufficient explanation, leaving researchers and institutions in the dark. Our taxonomy doesn’t solve the data completeness problem, but it does provide a more structured way to interpret what’s available.

Four Primary Categories, Plus One

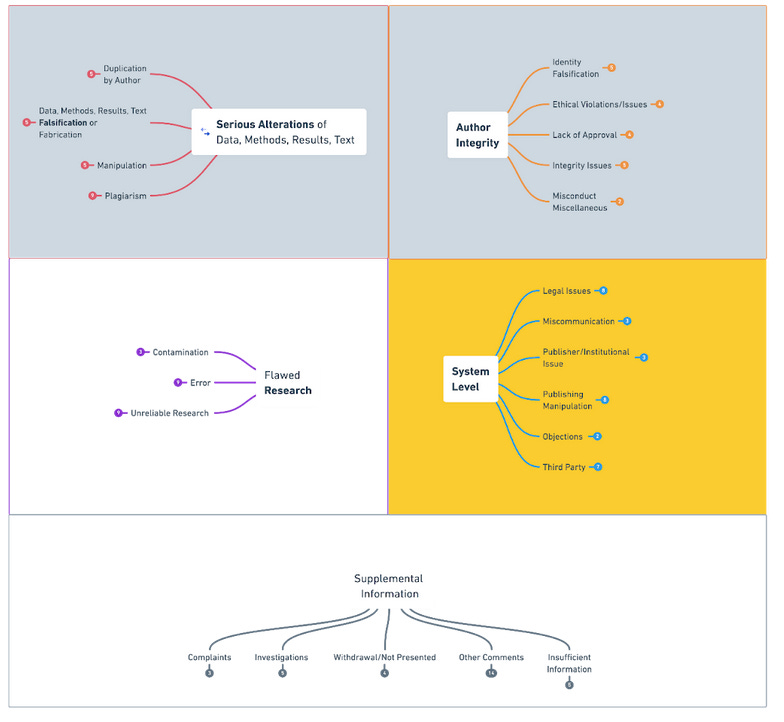

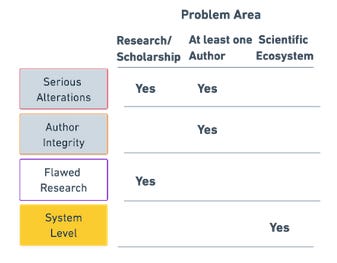

Our revised taxonomy organizes retraction reasons into four major categories, each capturing a different aspect of trust and failure in the research ecosystem:

Flawed Research – Honest errors or methodological issues, such as contaminated reagents or irreproducible results.

Serious Alterations of Data, Methods, Results, Text – Falsification, fabrication, plagiarism, and manipulation of data, methods, results, or entire text; that is, actions that appear intended to deceive.

Author Integrity – Breaches of ethical norms, such as failure to obtain IRB approval or identity falsification.

System-Level Issues – Problems caused by journals, publishers, legal disputes, or third parties that may not implicate the research or researcher directly.

A fifth, cross-cutting category, Supplemental Information, includes contextual metadata that doesn't inform judgments about trust (e.g., “investigation underway” or “author unresponsive”).
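As a rough illustration of how the five categories could be used in practice, here is a minimal Python sketch. The category names come from the list above; the reason labels in `EXAMPLE_REASONS` are only the examples mentioned in this post, written as hypothetical lowercase strings, and are not the actual reason labels or mapping in the published taxonomy. The `classify` helper is likewise an assumption made for illustration.

```python
from enum import Enum


class Category(Enum):
    FLAWED_RESEARCH = "Flawed Research"
    SERIOUS_ALTERATIONS = "Serious Alterations of Data, Methods, Results, Text"
    AUTHOR_INTEGRITY = "Author Integrity"
    SYSTEM_LEVEL = "System-Level Issues"
    SUPPLEMENTAL = "Supplemental Information"


# Illustrative lookup built only from examples named in this post.
# Keys are hypothetical labels, NOT the real Retraction Watch reason strings.
EXAMPLE_REASONS = {
    "contaminated reagents": Category.FLAWED_RESEARCH,
    "irreproducible results": Category.FLAWED_RESEARCH,
    "falsification": Category.SERIOUS_ALTERATIONS,
    "fabrication": Category.SERIOUS_ALTERATIONS,
    "plagiarism": Category.SERIOUS_ALTERATIONS,
    "failure to obtain irb approval": Category.AUTHOR_INTEGRITY,
    "identity falsification": Category.AUTHOR_INTEGRITY,
    "legal dispute": Category.SYSTEM_LEVEL,
    "investigation underway": Category.SUPPLEMENTAL,
    "author unresponsive": Category.SUPPLEMENTAL,
}


def classify(reasons):
    """Map a notice's reasons to categories.

    Returns (set of categories, list of reasons with no known mapping).
    """
    categories = set()
    unmapped = []
    for reason in reasons:
        key = reason.strip().lower()
        if key in EXAMPLE_REASONS:
            categories.add(EXAMPLE_REASONS[key])
        else:
            unmapped.append(reason)
    return categories, unmapped
```

Returning the unmapped reasons separately, rather than guessing a category for them, keeps the gaps in the source data visible. For real use, the lookup would be replaced with the mapping from the figshare dataset cited below.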

The taxonomy is freely available here: McIntosh, Leslie D.; Hudson Vitale, Cynthia; Weber-Boer, Kathryn (2025). Retraction Reasons Taxonomy v2.0. figshare. Dataset. https://doi.org/10.6084/m9.figshare.29554646.v2

Error vs. Deception: A Crucial Distinction

One of the key advances in this version of the taxonomy is the ability to distinguish retractions due to errors from those involving intent to deceive. That distinction matters deeply, even if you don’t use the research. Papers retracted for flawed research, such as statistical missteps or experimental contamination, should not be viewed in the same light as papers retracted for falsified data or fake peer review.

Consider the recent case reported by Science and covered in Retraction Watch, in which a high-profile study on ‘arsenic life’ was retracted due to serious methodological flaws – specifically, apparent contamination of the microbes under study. The authors disagreed with the retraction and stood by their conclusions. And that’s still okay. Science is complex, often uncertain, and sometimes corrected without consensus. A retraction in such a case doesn’t point to misconduct; it reflects the scientific process at work.

In fact, in many error-based retractions, authors themselves flag the issue and request the retraction, a sign of integrity, not misconduct.
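One way to put the error-versus-deception distinction to work is sketched below in Python. It assumes a notice can carry more than one category, ignores Supplemental Information because it doesn't inform judgments about trust, and applies a precedence rule that is our simplification for illustration rather than part of the taxonomy: any Serious Alterations category marks the notice as involving apparent deception, and a notice counts as error-based only when Flawed Research is the sole remaining category. The function name and return labels are hypothetical.

```python
SERIOUS_ALTERATIONS = "Serious Alterations of Data, Methods, Results, Text"
FLAWED_RESEARCH = "Flawed Research"
SUPPLEMENTAL = "Supplemental Information"


def error_or_deception(categories):
    """Give a coarse reading of one retraction notice.

    `categories` is an iterable of category names attached to the notice.
    The precedence used here is an illustrative assumption, not a rule
    defined by the taxonomy.
    """
    # Supplemental Information is context only, so drop it first.
    relevant = set(categories) - {SUPPLEMENTAL}
    if SERIOUS_ALTERATIONS in relevant:
        return "deception"
    if relevant == {FLAWED_RESEARCH}:
        return "error"
    # Author Integrity, System-Level Issues, mixed or empty cases
    # need a human reading of the notice.
    return "undetermined"


print(error_or_deception([FLAWED_RESEARCH, SUPPLEMENTAL]))
```

Anything this rule cannot settle is deliberately left as "undetermined" instead of being forced into one of the two bins.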

What This Means for Trust in Science

Understanding why a paper was retracted enables a more granular and just assessment of trust: trust in the research itself, in the researcher, and in the publishing system. Not all retractions are created equal, and treating them as if they were only fuels confusion and erodes public confidence.

With this updated taxonomy, we offer a tool to navigate that complexity and shine a clearer light on where trust has been lost and where it has merely been misunderstood.

Look for Part 2 tomorrow (Thursday, 31 July), where we look at…

Retraction Reasons by the Numbers

First, understand that most retraction notices cite more than one reason.

Dear Leslie,

Many thanks for your post. The taxonomy is very valuable for us because we are trying to implement a classification of retracted publications according to Retraction Watch and other platforms. I just wanted to present our database, the first specialized search engine for retractions, retracted publications and withdrawals. It is an open-source tool that tracks retractions across multiple sources and ingests records from OpenAlex, including more than 120k publications from 2000 onward. It also ranks organizations, authors, and journals by records in the database. https://retractbase.csic.es/

Today, we are working on cleaning the data, indicators, visualizations, and many other things.

Regards

José Luis Ortega